Taskflow 3.2.0-Master-Branch

We study a text processing pipeline that finds the most frequent character of each string from an input source. Parallelism takes the form of a three-stage pipeline that transforms each input string into a final pair of the most frequent character and its count.

Given an input vector of strings, we want to compute the most frequent character for each string using a series of transform operations. For example:
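A serial reference version of this computation can be sketched as follows. The seven input strings are an assumption of this sketch, chosen so that the results agree with the outputs quoted at the end of this section; the helper names most_frequent and sample_input are hypothetical, and breaking ties toward the smaller character is our own choice.

```cpp
#include <cstddef>
#include <string>
#include <unordered_map>
#include <utility>
#include <vector>

// Serial reference: returns the most frequent character of `s` and its count.
// Ties go to the smaller character (an assumption of this sketch).
// An empty string yields {'\0', 0}.
std::pair<char, std::size_t> most_frequent(const std::string& s) {
  std::unordered_map<char, std::size_t> freq;
  for (char c : s) {
    ++freq[c];
  }
  std::pair<char, std::size_t> best{'\0', 0};
  for (const auto& [c, n] : freq) {
    if (n > best.second || (n == best.second && c < best.first)) {
      best = {c, n};
    }
  }
  return best;
}

// Illustrative input (assumed), consistent with the results quoted later.
std::vector<std::string> sample_input() {
  return {"abade", "ddddf", "eefge", "xyzzd", "ijjjj", "jiiii", "kkijk"};
}
```

The pipeline described next computes the same pairs, but splits the work into stages so that several strings can be in flight at once.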

We decompose the algorithm into three stages:

1. Read a std::string from the input vector.
2. Generate a std::unordered_map<char, size_t> frequency map from the string.
3. Reduce the map to a std::pair<char, size_t> holding the most frequent character and its count.

The first and the third stages process inputs and generate results in serial, and the second stage can run in parallel. The algorithm is a perfect fit for pipeline parallelism, as different stages can overlap with each other in time across parallel lines.

We create a pipeline of three pipes (stages) and two parallel lines to solve the problem. The number of parallel lines is a tunable parameter. In most cases, we can just use std::thread::hardware_concurrency as the line count. The first pipe reads an input string from the vector in order, the second pipe transforms the input string from the first pipe to a frequency map in parallel, and the third pipe reduces the frequency map to find the most frequent character. The overall implementation is shown below:
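When deriving the line count from the hardware, note that std::thread::hardware_concurrency is allowed to return 0 if the value cannot be determined. A minimal sketch of a guarded choice is given below; the helper name choose_num_lines and its fallback of 2 are assumptions for illustration.

```cpp
#include <cstddef>
#include <thread>

// Picks a line count from the hardware, falling back to a caller-supplied
// default when hardware_concurrency() reports 0 (value not computable).
std::size_t choose_num_lines(std::size_t fallback = 2) {
  const unsigned hw = std::thread::hardware_concurrency();
  return hw == 0 ? fallback : static_cast<std::size_t>(hw);
}
```

Because this value is only known at run time, a per-line buffer sized by it would need a dynamically sized container such as std::vector rather than a fixed-size array.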

Taskflow does not provide any data abstraction to perform pipeline scheduling, but gives users full control over data management in their applications. In this example, we create a one-dimensional buffer of a std::variant data type to store the output of each pipe in a uniform storage:
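One way such a buffer might be declared, using only the standard library, is sketched here. The alias data_type and the constant num_lines are names assumed for this sketch; mybuffer is the name used in the text, and the line count of two follows the description above.

```cpp
#include <array>
#include <cstddef>
#include <string>
#include <unordered_map>
#include <utility>
#include <variant>

// One slot can hold the output of any of the three pipes.
using data_type = std::variant<
  std::string,                            // output of the first pipe
  std::unordered_map<char, std::size_t>,  // output of the second pipe
  std::pair<char, std::size_t>            // output of the third pipe
>;

constexpr std::size_t num_lines = 2;  // two parallel lines, as in the text

// One slot per parallel line; a line overwrites its own slot at each pipe.
std::array<data_type, num_lines> mybuffer;
```

Each line reuses its slot as data moves through the pipes, so the buffer needs only as many entries as there are lines, not as many as there are input strings.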

The first pipe reads one string and puts it in the corresponding entry in the buffer, mybuffer[pf.line()]. Since we read the strings in order, we declare the pipe as a serial type:
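The body of such a first pipe can be sketched independently of Taskflow. In the sketch below, the parameters token and line stand in for the values a pipe callable would obtain from the pipeflow object (we assume pf.token() and pf.line()), and returning false stands in for signalling the end of input (we assume pf.stop()). The function name read_stage, data_type, and num_lines are hypothetical.

```cpp
#include <array>
#include <cstddef>
#include <string>
#include <unordered_map>
#include <utility>
#include <variant>
#include <vector>

using data_type = std::variant<
  std::string,
  std::unordered_map<char, std::size_t>,
  std::pair<char, std::size_t>
>;

constexpr std::size_t num_lines = 2;

// Copies the token-th input string into the slot owned by `line`.
// Returns false once every input has been consumed, which is where a
// real pipe would stop the pipeline instead of writing to the buffer.
bool read_stage(std::size_t token, std::size_t line,
                const std::vector<std::string>& input,
                std::array<data_type, num_lines>& buffer) {
  if (token >= input.size()) {
    return false;
  }
  buffer[line] = input[token];
  return true;
}
```

Since the pipe is serial, tokens are issued one at a time in increasing order, which is what keeps the reads in input order.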

The second pipe gets the input string from the previous pipe and transforms it into a frequency map that records the number of occurrences of each character in the string. As multiple transforms can operate simultaneously, we declare the pipe as a parallel type:
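The transform performed by this pipe might look like the following sketch, which reads the string held in a buffer slot and replaces it with the frequency map. The function name count_stage and the alias data_type are assumptions for illustration.

```cpp
#include <cstddef>
#include <string>
#include <unordered_map>
#include <utility>
#include <variant>

using data_type = std::variant<
  std::string,
  std::unordered_map<char, std::size_t>,
  std::pair<char, std::size_t>
>;

// Replaces the string stored in `slot` with its character-frequency map.
// std::get throws std::bad_variant_access if the slot holds another type.
void count_stage(data_type& slot) {
  std::unordered_map<char, std::size_t> freq;
  for (char c : std::get<std::string>(slot)) {
    ++freq[c];
  }
  // The loop has finished reading the string, so overwriting is safe here.
  slot = std::move(freq);
}
```

Running this concurrently on different lines is safe as long as each call touches only its own slot, which is the case when the slot is indexed by the line number.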

Similarly, the third pipe gets the input frequency map from the previous pipe and reduces it to find the most frequent character. The result does not have to be stored in the buffer; it can go to another place defined by the application (e.g., an output file). As we want to output the result in the same order as the input, we declare the pipe as a serial type:
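The reduction in this pipe can be sketched with std::max_element over the map entries. The function name reduce_stage and the alias data_type are hypothetical. Because the iteration order of std::unordered_map is unspecified, the sketch breaks ties toward the smaller character to keep the result deterministic; that rule is our assumption, not something the text prescribes.

```cpp
#include <algorithm>
#include <cstddef>
#include <string>
#include <unordered_map>
#include <utility>
#include <variant>

using data_type = std::variant<
  std::string,
  std::unordered_map<char, std::size_t>,
  std::pair<char, std::size_t>
>;

// Reduces the frequency map stored in `slot` to the most frequent character
// and its count, stores that pair back into the slot, and returns it.
// An empty map yields {'\0', 0}.
std::pair<char, std::size_t> reduce_stage(data_type& slot) {
  const auto& freq = std::get<std::unordered_map<char, std::size_t>>(slot);
  auto best = std::max_element(
    freq.begin(), freq.end(),
    [](const auto& a, const auto& b) {
      // Order by count; on equal counts the smaller character ranks higher.
      return a.second < b.second ||
             (a.second == b.second && a.first > b.first);
    });
  std::pair<char, std::size_t> result{'\0', 0};
  if (best != freq.end()) {
    result = {best->first, best->second};
  }
  slot = result;  // `freq` and `best` are invalid from here on
  return result;
}
```

An application that writes results elsewhere could drop the assignment to the slot and use only the returned pair.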

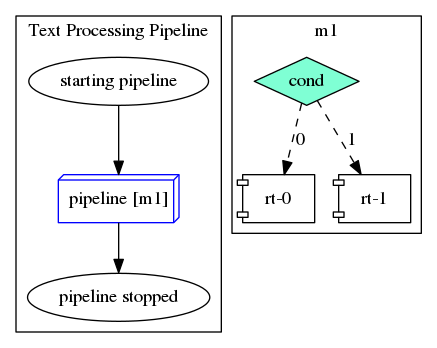

To build up the taskflow graph for the pipeline, we create a module task out of the pipeline structure and connect it with two tasks that output messages before and after the pipeline:

Finally, we submit the taskflow to an executor and run it once:

As the second stage is a parallel pipe, the output may interleave. One possible result is shown below:

We can see seven outputs at the third stage that show the most frequent character for each of the seven strings in order (a:2, d:4, e:3, z:2, j:4, i:4, k:3). The taskflow graph of this pipeline workload is shown below: