|

Taskflow

3.2.0-Master-Branch

|

This chapter discusses how to limit the concurrency, that is, the maximum number of workers, in subgraphs of a taskflow.

Taskflow provides a mechanism, tf::Semaphore, for you to limit the maximum concurrency in a section of tasks. You can let a task acquire/release one or multiple semaphores before/after executing its work. A task can acquire and release a semaphore, or just acquire or just release it. A tf::Semaphore object starts with an initial count. As long as that count is above 0, tasks can acquire the semaphore and do their work. If the count is 0 or less, a task trying to acquire the semaphore will not run but goes to a waiting list of that semaphore. When the semaphore is released by another task, it reschedules all tasks on that waiting list.

Consider an example with five tasks and no dependencies between them. Under normal circumstances, the five tasks would run concurrently. With a semaphore of initial count 1 that every task must acquire before running and release when done, at most one task runs at a time. The tasks therefore execute one after another, in an order chosen by the scheduler.

For the same example, we can set the semaphore's initial count to a value other than 1, say 3, so that at most three of the five tasks A, B, C, D, and E run at the same time.

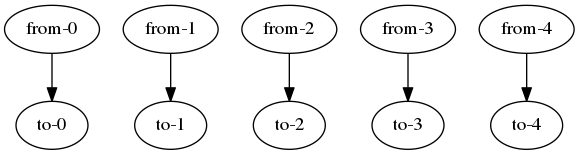

Semaphores are powerful for limiting the maximum concurrency of not only a single section of tasks but also different sections of tasks. Specifically, you can have one task acquire a semaphore and have another task release that semaphore to limit concurrency across subgraphs of tasks. For example, a semaphore can serialize the execution of five pairs of tasks without explicit dependencies between the pairs.

Without semaphores, each pair of tasks, e.g., from-0 -> to-0, runs independently of and concurrently with the other pairs. Here, however, each from task acquires the semaphore before running its work, and the semaphore is not released until the paired to task is done. This constraint forces the pairs to run one at a time, while the order in which the pairs run is up to the scheduler.

tf::CriticalSection is a wrapper over tf::Semaphore specialized for limiting the maximum concurrency over a section of tasks. A critical section starts with an initial count representing that limit. When a task is added to the critical section, the task acquires and releases the semaphore internal to the critical section: the method tf::CriticalSection::add automatically calls tf::Task::acquire and tf::Task::release for each task added. For example, a critical section of two workers lets at most two of five added tasks run at the same time.

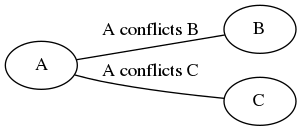

One important application of tf::Semaphore is conflict-aware scheduling using a conflict graph. A conflict graph is an undirected graph where each vertex represents a task and each edge represents a conflict between a pair of tasks. When a task conflicts with another task, the two cannot run at the same time. Consider a conflict graph in which task A conflicts with task B and with task C (and vice versa): A cannot run together with B or C, whereas B and C can run together.

We can create one semaphore with an initial count of 1 for each edge in the conflict graph and let the two tasks of that edge acquire and release the semaphore. This organization prevents the two tasks from running concurrently.

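The same conflict constraints can be expressed more simply with tf::CriticalSection: create one critical section of one worker for each conflict edge and add the two conflicting tasks to it.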