|

Taskflow

3.2.0-Master-Branch

|

|

Taskflow

3.2.0-Master-Branch

|

Taskflow supports SYCL, a general-purpose heterogeneous programming model, to program heterogeneous tasks in a single-source C++ environment. This chapter discusses how to write SYCL C++ kernel code with Taskflow based on SYCL 2020 Specification.

You need to include the header file, taskflow/sycl/syclflow.hpp, for using tf::syclFlow.

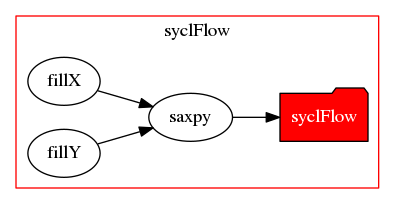

Taskflow introduces a task graph-based programming model, tf::syclFlow, to program SYCL tasks and their dependencies. A syclFlow is a task in a taskflow and is associated with a SYCL queue to execute kernels on a SYCL device. To create a syclFlow task, emplace a callable with an argument of type tf::syclFlow and associate it with a SYCL queue. The following example (saxpy.cpp) implements the canonical saxpy (A·X Plus Y) task graph using tf::syclFlow.

Debrief:

X with 1.0f Y with 2.0f tf::syclFlow is a lightweight task graph-based programming layer atop SYCL. We do not expend yet another effort on simplifying kernel programming but focus on tasking SYCL operations and their dependencies. This organization lets users fully take advantage of SYCL features that are commensurate with their domain knowledge, while leaving difficult task parallelism details to Taskflow.

Use DPC++ clang to compile a syclFlow program:

Please visit the page Compile Taskflow with SYCL for more details.

tf::syclFlow provides a set of methods for creating tasks to perform common memory operations, such as copy, set, and fill, on memory area pointed to by unified shared memory (USM) pointers. The following example creates a syclFlow task of two copy operations and one fill operation that set the first N/2 elements in the vector to -1.

Both tf::syclFlow::copy and tf::syclFlow::fill operate on typed data. You can use tf::syclFlow::memcpy and tf::syclFlow::memset to operate on untyped data (i.e., array of bytes).

SYCL allows a simple execution model in which a kernel is invoked over an N-dimensional index space defined by sycl::range<N>, where N is one, two or three. Each work item in such a kernel executes independently across a set of partitioned work groups. tf::syclFlow::parallel_for defines several variants to create a kernel task. The following variant pairs up a sycl::range and a sycl::id to set each element in data to 1.0f when it is not necessary to query the global range of the index space being executed across.

As the same example, the following variant enables low-level functionality of work items and work groups using sycl::nd_range and sycl::nd_item. This becomes valuable when an execution requires groups of work items that communicate and synchronize.

All the kernel methods defined in the SYCL queue are applicable for tf::syclFlow::parallel_for.

SYCL provides a way to encapsulate a device-side operation and all its data and event dependencies in a single command group function object. The function object accepts an argument of command group handler constructed by the SYCL runtime. Command group handler is the heart of SYCL programming as it defines pretty much all kernel-related methods, including submission, execution, and synchronization. You can directly create a SYCL task from a command group function object using tf::syclFlow::on.

By default, the executor offloads and executes the syclFlow once. When a syclFlow is being executed, its task graph will be materialized by the Taskflow runtime and submitted to its associated SYCL queue in a topological order of task dependencies defined in that graph. You can explicitly execute a syclFlow using different offload methods:

After you offload a syclFlow, it is considered executed, and the executor will not run an offloaded syclFlow after leaving the syclFlow task callable. On the other hand, if a syclFlow is not offloaded, the executor runs it once. For example, the following two versions represent the same execution logic.

You can update a SYCL task from an offloaded syclFlow and rebind it to another task type. For example, you can rebind a memory operation task to a parallel-for kernel task from an offloaded syclFlow and vice versa.

Each method of task creation in tf::syclFlow has a corresponding method of rebinding a task to that task type (e.g., tf::syclFlow::on and tf::syclFlow::rebind_on, tf::syclFlow::parallel_for and tf::syclFlow::parallel_for).

You can use tf::syclFlow in a standalone environment without going through tf::Taskflow and offloads it to a SYCL device from the caller thread. All the tasking methods we have discussed so far apply to the standalone use.